The deployment of drones has revolutionized Search and Rescue (SAR) operations, offering unparalleled perspectives and rapid data collection in challenging environments. However, the true potential of these unmanned aerial systems (UAS) for SAR is unlocked when they are coupled with artificial intelligence (AI) for real-time analysis. The cornerstone of effective AI in SAR is high-quality drone data annotation: the process of labeling raw drone imagery and sensor data so that machine learning models can be trained to detect, classify, and track objects of interest, such as missing persons, vehicles, or debris. Without precise and comprehensive annotation, even the most advanced AI algorithms risk misinterpreting critical information, which can cause life-threatening delays or errors.

This guide explores the best practices for drone data annotation, specifically tailored for AI-driven SAR analysis, ensuring that AI models are trained with the accuracy and reliability necessary to save lives.

The Transformative Role of Drones and AI in Modern SAR

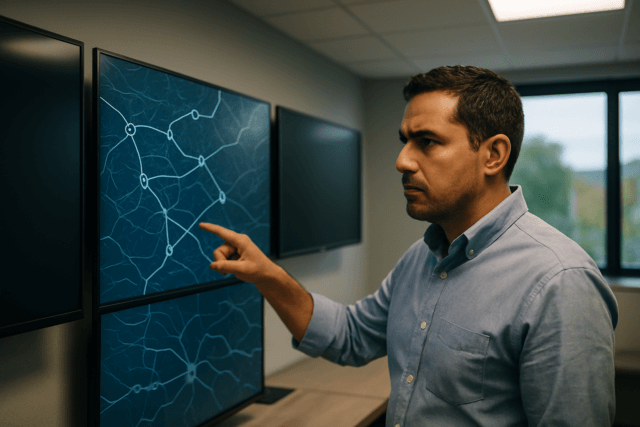

In disaster zones, remote wilderness, or urban search areas, drones equipped with various sensors can quickly survey vast terrains, access hazardous locations, and provide critical situational awareness to first responders. AI algorithms, trained on large datasets of annotated drone data, can then automate the tedious and time-consuming task of analyzing this imagery. This automation significantly speeds up the identification of survivors, assessment of damage, and planning of evacuation routes, working far faster than human review alone.

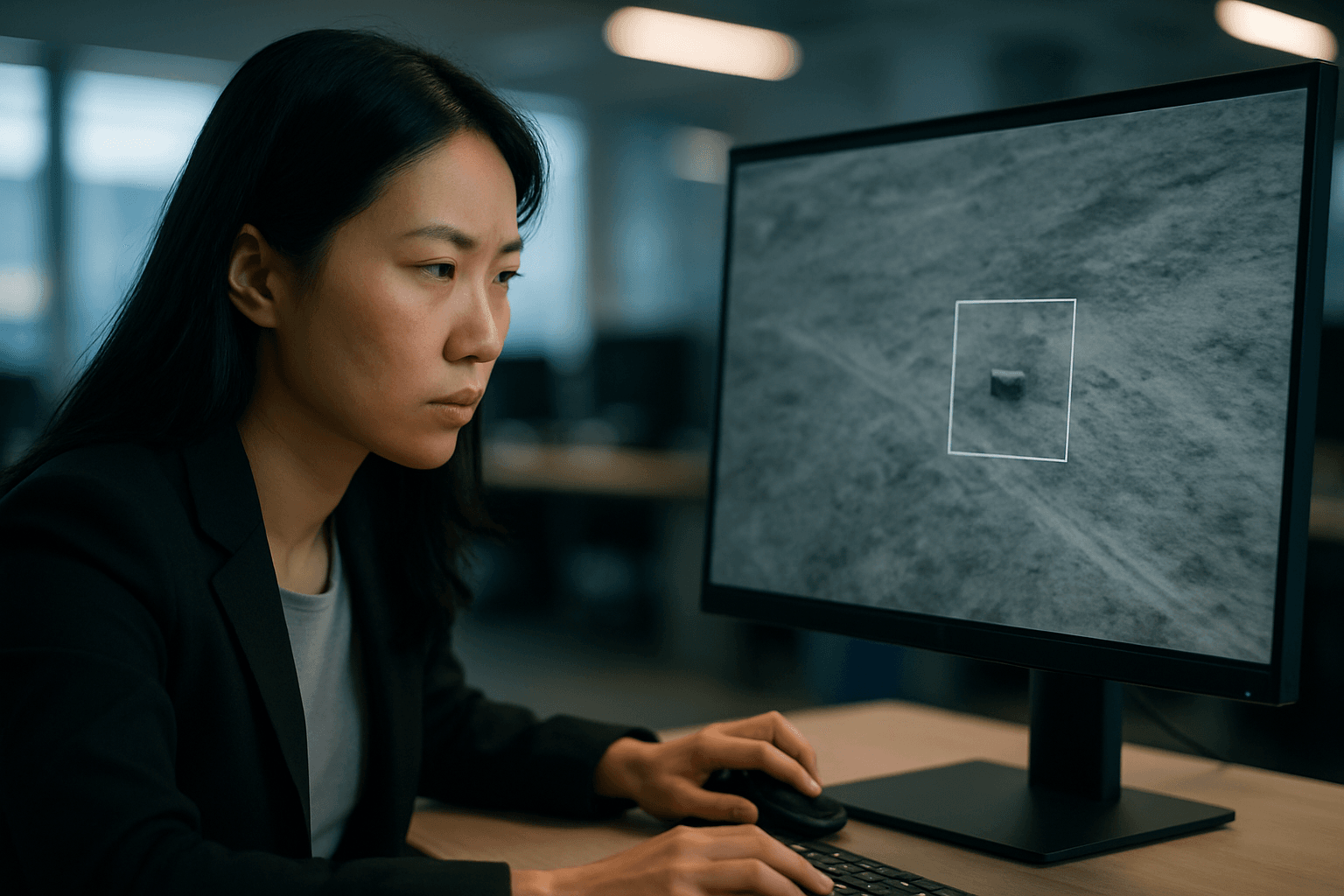

AI models can process data much faster than humans, scanning thousands of images and videos to identify patterns and anomalies that might indicate a person in distress or a critical hazard. This capability automates the process of identifying missing persons and provides valuable information about the surrounding area.

Understanding Drone Data Annotation for SAR Analysis

Drone data annotation for SAR involves meticulously labeling objects, features, and environmental conditions within the data collected by drones. This labeled “ground truth” data is then used to train AI models to perform tasks like object detection, classification, and segmentation autonomously.

Types of Data Collected by Drones in SAR

Drones deployed for SAR missions can carry a variety of sensors, collecting diverse data types crucial for comprehensive analysis:

- RGB Imagery: Standard visual photographs and videos, often high-resolution, used for general reconnaissance and object identification.

- Thermal Imagery: Infrared data that detects heat signatures, invaluable for finding people or animals in low light, dense foliage, or through smoke, where visual cameras are ineffective.

- LiDAR Scans: Light Detection and Ranging data creates precise 3D point clouds of the terrain and objects, useful for mapping complex environments and detecting subtle changes.

- Multispectral Data: Captures imagery in several discrete wavelength bands, including some outside the visible range. It is often used in agriculture but can also help differentiate materials or surface conditions in a SAR context.

- Synthetic Aperture Radar Imagery: A form of radar that generates high-resolution images regardless of weather or lighting conditions. Because it is an active sensor, it works in darkness and through clouds and smoke, and longer-wavelength systems can partly penetrate foliage. This is particularly valuable when visual line of sight is obstructed. Note that synthetic aperture radar shares the acronym SAR with Search and Rescue, so check which meaning is intended whenever the abbreviation appears.

Annotation Methods for SAR-Specific Objects

The choice of annotation method depends on the nature of the object and the AI task:

- Bounding Box Annotation: Drawing rectangular boxes around objects like people, vehicles, or debris. This is a fast method suitable for clearly defined objects.

- Polygon Annotation: Outlining irregular shapes with pixel-level precision, ideal for complex objects or areas like collapsed structures, bodies of water, or dense vegetation.

- Semantic Segmentation: Assigns a label to each pixel in an image, providing precise identification of various land features (e.g., ‘forest’, ‘building’, ‘water body’) or distinguishing between a survivor and their background.

- Keypoint Detection: Marking specific points on an object, such as joints on a human body, useful for analyzing posture or movement.

- 3D Box Labeling: Outlining objects in three-dimensional space with cuboids, which is crucial for detecting and localizing objects in 3D data, especially LiDAR point clouds.
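
The trade-off between bounding boxes and polygons can be made concrete with a small sketch. The record layout and function names below are illustrative assumptions, not the schema of any particular annotation tool. The code derives an axis-aligned box from a polygon outline and reports how much of that box the object actually fills. A low fill ratio suggests that a box would include a lot of background and that a polygon or segmentation mask may be the better label.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in pixel coordinates


@dataclass
class BoxAnnotation:
    """Hypothetical record for one bounding-box label."""
    label: str
    x_min: float
    y_min: float
    width: float
    height: float


def polygon_area(polygon: List[Point]) -> float:
    """Area of a simple (non-self-intersecting) polygon, shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(polygon, polygon[1:] + polygon[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def polygon_to_box(label: str, polygon: List[Point]) -> BoxAnnotation:
    """Smallest axis-aligned box that contains every polygon vertex."""
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least three vertices")
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return BoxAnnotation(label, min(xs), min(ys),
                         max(xs) - min(xs), max(ys) - min(ys))


def fill_ratio(polygon: List[Point]) -> float:
    """Fraction of the bounding box that the polygon covers (0.0 to 1.0)."""
    box = polygon_to_box("tmp", polygon)
    box_area = box.width * box.height
    return polygon_area(polygon) / box_area if box_area else 0.0


# A triangular debris outline fills only half of its bounding box.
debris_outline = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
print(polygon_to_box("debris_field", debris_outline))
print(fill_ratio(debris_outline))  # 0.5
```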

Why Accurate Annotation is Critical for AI-Driven SAR

The performance and reliability of AI models in SAR depend directly on the quality and accuracy of their training data. Errors or inconsistencies in annotation can have severe consequences.

Impact on Model Training and Performance

Clean, consistent, and context-rich annotations enable machine learning models to accurately detect and classify objects across different altitudes, speeds, and terrains. Poor annotation, conversely, introduces “noise” into datasets, leading to misclassification, failed object detection, or unsafe decisions by autonomous drone systems. Even minor inaccuracies in training data can cause significant performance issues for drones operating in safety-critical scenarios.

Reducing False Positives and Negatives

In SAR, false positives (identifying something as a person when it’s not) can waste precious resources, while false negatives (missing a person in need) can have tragic outcomes. High-quality annotation helps AI models develop a robust understanding of what constitutes a target, significantly reducing these errors. Reported precision for video annotation can reach up to 99.9%, and labels of that quality in turn support higher AI model accuracy.
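
False positives and false negatives are usually summarized as precision and recall. The sketch below computes both from raw counts, along with an F-beta score. The default `beta = 2.0` is an assumption chosen to weight recall more heavily, reflecting the idea that a missed person is costlier than a wasted check. The right weighting is an operational decision, not something the formula decides.

```python
from typing import Tuple


def detection_metrics(tp: int, fp: int, fn: int,
                      beta: float = 2.0) -> Tuple[float, float, float]:
    """Return (precision, recall, F-beta) from detection counts.

    tp: true positives  (real targets that were detected)
    fp: false positives (detections that were not real targets)
    fn: false negatives (real targets that were missed)
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    b2 = beta * beta
    denom = b2 * precision + recall
    f_beta = (1 + b2) * precision * recall / denom if denom else 0.0
    return precision, recall, f_beta


# Example: 9 people found, 9 false alarms, 1 person missed.
precision, recall, f2 = detection_metrics(tp=9, fp=9, fn=1)
print(f"precision={precision:.2f} recall={recall:.2f} F2={f2:.3f}")
```

With these counts the detector finds most targets (high recall) but half of its alerts are false alarms (low precision), and the recall-weighted score sits closer to the recall value.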

Ethical Considerations and Human Trust

For SAR professionals to trust and effectively integrate AI tools, the systems must demonstrate consistent and reliable performance. Transparent and accurate annotation practices build this trust, ensuring that AI recommendations are dependable and ethical in critical, life-saving missions.

Best Practices for Effective Drone Data Annotation in SAR

Implementing best practices is crucial for creating high-quality datasets that power effective AI in SAR.

Define Clear Annotation Guidelines and Protocols

Standardization is key to consistency, especially when multiple annotators or teams are involved.

- Standardized Object Classes: Establish clear, unambiguous categories for all objects of interest (e.g., ‘human_visible’, ‘human_partially_obscured’, ‘vehicle_wreck’, ‘debris_field’, ‘fire_active’). These should be developed in consultation with SAR experts.

- Edge Cases and Ambiguity Handling: Provide specific instructions for annotating challenging scenarios, such as partially visible objects, reflections, shadows, or objects in cluttered environments. Define rules for when an object is too obscured to be reliably labeled.

- Temporal Consistency: For video annotation, ensure objects are tracked consistently across frames, especially for moving targets.
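
Guidelines like these are easier to enforce when part of them is checked automatically. The following is a minimal sketch, assuming annotations arrive as dictionaries with hypothetical `frame`, `track_id`, and `label` keys and that class names are written with underscores. It flags labels outside the agreed class list and tracks that skip frames. A skipped frame is not always an error (the target may have left the view), so the output is a review list rather than a verdict.

```python
from collections import defaultdict
from typing import Dict, List

# Assumed class list, modeled on the example categories in this guide.
ALLOWED_CLASSES = {
    "human_visible",
    "human_partially_obscured",
    "vehicle_wreck",
    "debris_field",
    "fire_active",
}


def validate_annotations(annotations: List[Dict]) -> List[str]:
    """Return human-readable problems found in a list of video labels."""
    problems = []
    frames_by_track = defaultdict(set)
    for ann in annotations:
        if ann["label"] not in ALLOWED_CLASSES:
            problems.append(
                f"frame {ann['frame']}: unknown class '{ann['label']}'"
            )
        frames_by_track[ann["track_id"]].add(ann["frame"])
    # A track should normally be labeled in every frame between its
    # first and last appearance.
    for track_id in sorted(frames_by_track):
        frames = frames_by_track[track_id]
        expected = set(range(min(frames), max(frames) + 1))
        missing = sorted(expected - frames)
        if missing:
            problems.append(f"track {track_id}: no label in frames {missing}")
    return problems


labels = [
    {"frame": 0, "track_id": 1, "label": "human_visible"},
    {"frame": 1, "track_id": 1, "label": "human_partially_obscured"},
    {"frame": 3, "track_id": 1, "label": "human_visible"},
    {"frame": 0, "track_id": 2, "label": "person"},
]
for problem in validate_annotations(labels):
    print(problem)
```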

Utilize Diverse and Representative Datasets

AI models perform best when exposed to a wide variety of real-world conditions they are likely to encounter.

- Varying Environmental Conditions: Include data captured in different weather (rain, fog, clear), lighting (day, night, dawn/dusk), seasons, and terrains (urban, forest, mountainous, coastal).

- Different Sensor Types: Train models on data from RGB, thermal, LiDAR, and synthetic aperture radar sensors to build multi-modal intelligence that is resilient to diverse operational challenges. Radar data, in particular, overcomes the limitations of optical sensors in adverse visual conditions like night, fog, smoke, or dense foliage.

- Diverse Poses and Appearances: For human detection, ensure the dataset includes people in various postures (standing, sitting, lying, injured), clothing, and backgrounds.
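
One way to check whether a dataset is representative is to count samples per combination of conditions and list the combinations that fall short. The sketch below assumes each sample carries metadata under hypothetical keys such as `weather` and `lighting`. The threshold `min_count` is likewise an assumption to be set per project.

```python
from collections import Counter
from itertools import product
from typing import Dict, List, Sequence, Tuple


def coverage_gaps(samples: List[Dict[str, str]],
                  attributes: Sequence[str],
                  min_count: int) -> List[Tuple[str, ...]]:
    """List attribute combinations with fewer than min_count samples.

    Only values that occur somewhere in the dataset are combined, so a
    condition that is missing entirely must be added to the metadata
    vocabulary by hand before it can be reported.
    """
    values = {a: sorted({s[a] for s in samples}) for a in attributes}
    counts = Counter(tuple(s[a] for a in attributes) for s in samples)
    combos = product(*(values[a] for a in attributes))
    return [combo for combo in combos if counts[combo] < min_count]


dataset = [
    {"weather": "clear", "lighting": "day"},
    {"weather": "clear", "lighting": "day"},
    {"weather": "fog", "lighting": "day"},
    {"weather": "clear", "lighting": "night"},
]
# No foggy night-time imagery has been collected yet.
print(coverage_gaps(dataset, ["weather", "lighting"], min_count=1))
```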

Employ Advanced Annotation Tools and Techniques

Leveraging sophisticated tools and techniques can significantly enhance efficiency and accuracy.

- AI-Assisted Annotation: Use tools that offer pre-labeling capabilities or actively learn from human annotations to speed up the process. AI-powered tools can automate parts of the annotation workflow.

- Specialized Annotation Types: Employ semantic segmentation for precise land cover classification or accurate object boundaries. For 3D data from LiDAR, use point cloud annotation or 3D bounding boxes.

- Multi-Modal Fusion: When annotating, consider layering and cross-referencing different sensor data (e.g., using synthetic aperture radar imagery to validate objects partially hidden in optical data under cloud cover).

- Geospatial Accuracy: Ensure annotation tools support GeoTIFF files and allow for precise GPS coordinate tagging, so that every label can be tied to a real-world location. This is crucial for search-and-rescue mapping and for related applications such as environmental monitoring.
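
To illustrate the geospatial point, the sketch below converts a labeled pixel into map coordinates using a six-coefficient affine geotransform, the convention GDAL uses for georeferenced rasters such as GeoTIFF. The coefficient values are made up for the example. In practice they would be read from the image file, and the resulting coordinates are in whatever coordinate reference system the file declares.

```python
from typing import Sequence, Tuple


def pixel_to_geo(col: float, row: float,
                 gt: Sequence[float]) -> Tuple[float, float]:
    """Map the center of pixel (col, row) to georeferenced (x, y).

    gt follows the GDAL geotransform layout:
      gt[0], gt[3]: x and y of the top-left corner of the top-left pixel
      gt[1], gt[5]: pixel width and pixel height (height is usually negative)
      gt[2], gt[4]: rotation terms (zero for north-up images)
    """
    # Shift by half a pixel so the result refers to the pixel center.
    c = col + 0.5
    r = row + 0.5
    x = gt[0] + c * gt[1] + r * gt[2]
    y = gt[3] + c * gt[4] + r * gt[5]
    return x, y


# Assumed example: north-up image with 5 cm pixels in a projected CRS.
geotransform = (500000.0, 0.05, 0.0, 4100000.0, 0.0, -0.05)
x, y = pixel_to_geo(col=100, row=200, gt=geotransform)
print(f"x={x:.3f} y={y:.3f}")
```

Storing the map coordinates alongside the pixel label keeps a detection usable even after the image is cropped, resampled, or mosaicked.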

Popular annotation tools include Labelbox, RectLabel, VGG Image Annotator (VIA), LabelImg, and SuperAnnotate. Platforms like FlyPix AI, DJI Terra, SimActive Correlator3D, and Skycatch also offer capabilities for processing and analyzing drone imagery, often with AI features.

Implement Robust Quality Assurance (QA) Workflows

Maintaining high data quality requires continuous vigilance.

- Multi-Stage Review Process: Implement a system where annotations are reviewed by multiple human annotators or subject matter experts.

- Inter-Annotator Agreement (IAA) Metrics: Quantitatively measure the consistency between different annotators to identify areas where guidelines may be unclear or training is needed.

- Feedback Loops: Establish mechanisms for annotators to provide feedback on guidelines and for QA teams to communicate common errors back to the annotation team for continuous improvement.
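
One common inter-annotator agreement metric for categorical labels is Cohen's kappa, which corrects raw agreement for the agreement two annotators would reach by chance. The sketch below is a plain-Python version for two annotators who labeled the same items. It is one possible IAA measure among several, and box or mask labels would typically also need an overlap measure such as intersection over union.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(labels_a: Sequence[Hashable],
                 labels_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items given the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: based on how often each annotator uses each label.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label for every item.
        return 1.0
    return (observed - expected) / (1.0 - expected)


annotator_1 = ["person", "person", "none", "none"]
annotator_2 = ["person", "none", "none", "none"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the annotators agree on three of four items, but half of that agreement is expected by chance, so kappa is 0.5. Low values on a particular class are a hint that its guideline needs clarifying.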

Leverage Subject Matter Expertise

Involve SAR professionals directly in the annotation process. Their understanding of operational realities and target characteristics is invaluable for creating accurate and relevant labels.

Data Security and Privacy

Given the sensitive nature of SAR operations and the potential for capturing personal information, ensure all data handling and annotation processes comply with strict privacy regulations and security protocols.

Challenges in Drone Data Annotation for SAR

Despite its benefits, drone data annotation for SAR presents several unique challenges:

- Complexity of SAR Environments: SAR operations often occur in dynamic, unpredictable, and cluttered environments, making it difficult to clearly delineate objects. Objects in aerial imagery can be irregular, overlapping, or widely distributed.

- Scarcity of Labeled Search-and-Rescue Data: High-quality, real-world search-and-rescue datasets, especially for specific disaster scenarios, are often scarce, costly, and time-consuming to acquire. Synthetic data generation is emerging as a solution to this challenge.

- Data Volume and Processing Power: Drones generate massive volumes of high-resolution data, requiring significant computational resources for storage, processing, and annotation.

- Speckle Noise in Synthetic Aperture Radar Imagery: Radar imagery inherently contains “speckle noise,” which can complicate interpretation for human annotators and AI models alike if the data is not properly preprocessed.
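
As an example of preprocessing for speckle, the sketch below applies multilooking, a standard technique that averages neighboring radar intensity pixels to suppress speckle at the cost of spatial resolution. It is a plain-Python illustration on nested lists with made-up values. A real pipeline would use an array library and might prefer an adaptive speckle filter instead.

```python
from typing import List


def multilook(intensity: List[List[float]],
              looks_y: int, looks_x: int) -> List[List[float]]:
    """Average non-overlapping looks_y x looks_x blocks of an intensity image.

    Rows or columns that do not fill a complete block are dropped.
    """
    if looks_y < 1 or looks_x < 1:
        raise ValueError("look counts must be positive")
    rows = len(intensity) // looks_y
    cols = len(intensity[0]) // looks_x if intensity else 0
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [
                intensity[r * looks_y + dy][c * looks_x + dx]
                for dy in range(looks_y)
                for dx in range(looks_x)
            ]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


noisy = [
    [1.0, 3.0, 10.0, 10.0],
    [1.0, 3.0, 10.0, 10.0],
    [0.0, 0.0, 4.0, 8.0],
    [0.0, 0.0, 8.0, 4.0],
]
print(multilook(noisy, looks_y=2, looks_x=2))  # [[2.0, 10.0], [0.0, 6.0]]
```

Because the output image is smaller, any annotation drawn on it has to be scaled by the look factors before it is compared with labels made on the full-resolution data.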

The Future of AI and Drone Data in SAR

The future of AI-driven SAR analysis hinges on continued advancements in data annotation and AI capabilities. Emerging trends include the use of synthetic data to augment scarce real-world datasets, the integration of explainable AI (XAI) to build greater trust, and more sophisticated multi-modal fusion techniques that combine data from various sensors for a holistic understanding of the SAR environment. As technology progresses, AI-powered drone systems, underpinned by meticulously annotated data, will become even more integral to saving lives and enhancing the efficiency of search and rescue operations worldwide.