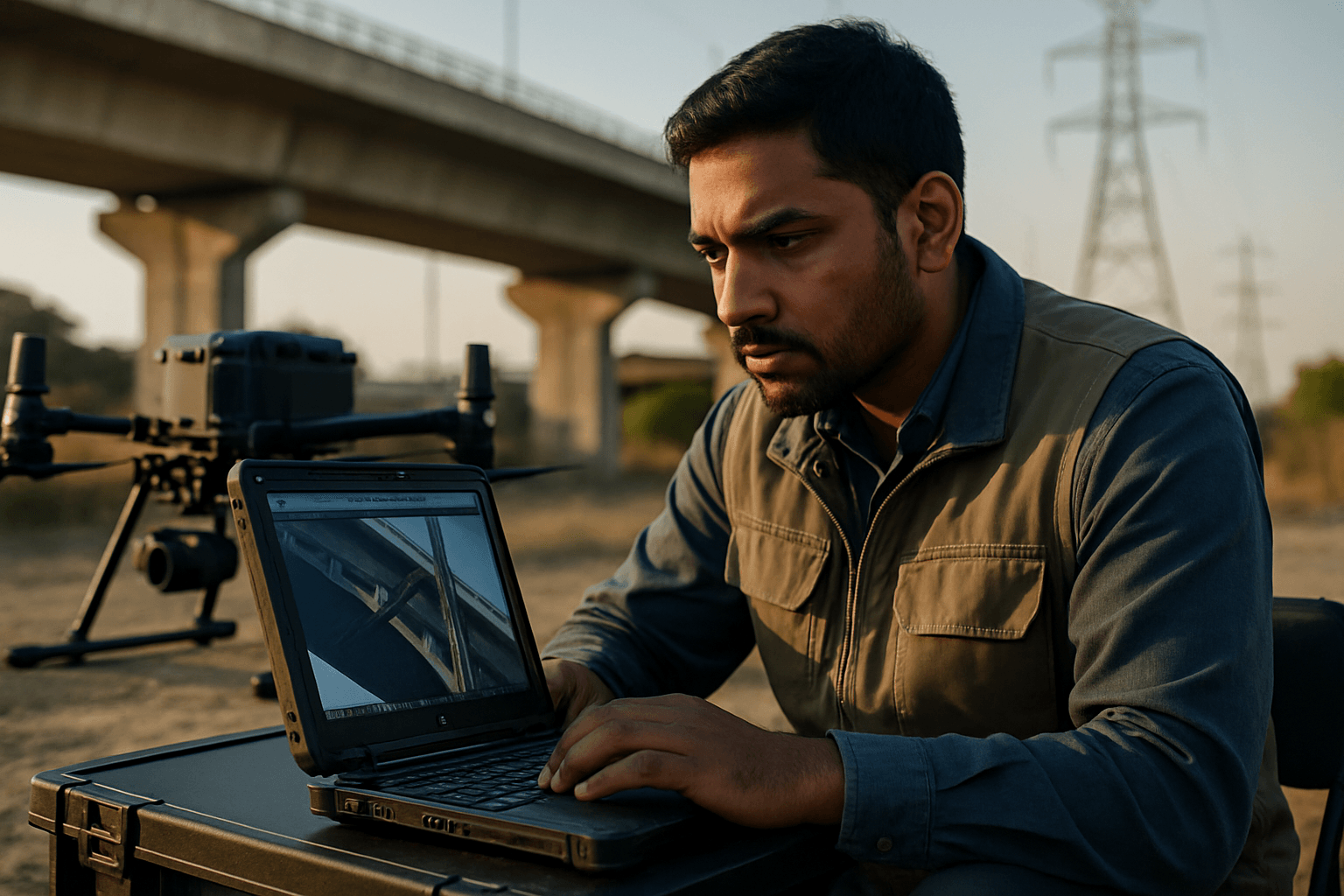

The skies above our cities and critical infrastructure are becoming increasingly busy with a new kind of worker: drones. These Unmanned Aerial Vehicles (UAVs) have revolutionized infrastructure inspection, offering a safer, more efficient, and often more cost-effective alternative to traditional manual methods. By leveraging high-resolution imagery, drones provide unprecedented access to hard-to-reach areas, enabling detailed assessment and proactive maintenance of vital assets like bridges, pipelines, power lines, and buildings. However, the true value of drone-based infrastructure analysis hinges on the quality of the captured data.

This guide outlines essential best practices for obtaining high-resolution imagery for infrastructure analysis, ensuring that the collected data is accurate, actionable, and ready for comprehensive assessment.

Selecting the Right Equipment and Sensors

The foundation of high-resolution imagery begins with choosing the appropriate drone and sensor payload for the specific inspection task.

Drone Capabilities and Sensor Types

Modern inspection drones come equipped with a range of sophisticated sensors beyond standard RGB cameras. These often include thermal cameras, LiDAR sensors, and multispectral cameras, each offering unique insights.

- RGB Cameras: These are fundamental for visual inspection, capturing high-resolution visible-light stills (and video, often in 4K or higher) that provide detailed visual records. A sensor of at least 12 megapixels is recommended, with 20 megapixels being ideal for higher accuracy.

- Thermal Cameras: Critical for detecting hidden issues like heat loss, moisture intrusion, electrical faults, or inefficiencies not visible to the naked eye.

- LiDAR (Light Detection and Ranging) Sensors: LiDAR uses laser pulses to generate highly accurate 3D point clouds. Because some pulses pass through gaps in foliage and return from the ground, LiDAR can map topography beneath vegetation, and because it supplies its own illumination, it delivers precise measurements even in low-light conditions. This makes it invaluable for detailed volumetric calculations and complex infrastructure modeling.

- Multispectral Cameras: These capture data across multiple spectral bands (including visible and near-infrared light), useful for assessing vegetation health or water quality, though less commonly the primary sensor for direct infrastructure structural analysis.

LiDAR vs. Photogrammetry: Choosing the Best Tool

While both LiDAR and photogrammetry can generate 3D models with centimeter-level accuracy, they operate differently and excel in different scenarios.

- Photogrammetry: Relies on overlapping photographs taken from different angles to create detailed 3D models with textures, colors, and realistic visual detail. It is generally more cost-effective as it primarily requires a high-resolution camera. Photogrammetry is ideal when high-resolution visual models and color information are paramount, and the site is open with minimal vegetation.

- LiDAR: An active remote sensing technology that emits up to hundreds of thousands of laser pulses per second to measure distances, generating highly accurate 3D point clouds. LiDAR’s key advantages are that some of its pulses reach the ground through gaps in dense vegetation and that it captures data in low-light conditions, making it superior for mapping complex terrain or areas obscured by foliage. While LiDAR point clouds are geometrically detailed, they lack the color and texture of photogrammetry models.

For complex projects, combining both LiDAR and photogrammetry is a common and complementary practice. LiDAR can capture areas that shadows or dense vegetation might obscure, while photogrammetry provides life-like context and visual detail to the LiDAR point cloud.
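
The comparison above boils down to a few rules of thumb. As a rough sketch only, the hypothetical helper below encodes them; the `Site` fields and `recommend_capture` function are illustrative names, not part of any tool, and real projects also weigh budget, required accuracy, and deliverables.

```python
from dataclasses import dataclass


@dataclass
class Site:
    dense_vegetation: bool      # foliage hides the ground or parts of the asset
    low_light: bool             # shadowed or poorly lit areas must be captured
    needs_visual_texture: bool  # deliverable must show color and surface detail


def recommend_capture(site: Site) -> str:
    """Rule-of-thumb sensor choice mirroring the comparison above (not a standard)."""
    needs_lidar = site.dense_vegetation or site.low_light
    if needs_lidar and site.needs_visual_texture:
        # LiDAR for hidden geometry, photogrammetry for life-like context
        return "lidar+photogrammetry"
    if needs_lidar:
        return "lidar"
    # Open, well-lit site: a high-resolution camera alone is usually cheaper
    return "photogrammetry"


print(recommend_capture(Site(dense_vegetation=True, low_light=False,
                             needs_visual_texture=True)))  # lidar+photogrammetry
```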

Meticulous Pre-Flight Planning

Effective drone inspection starts long before takeoff with thorough planning and preparation.

Defining Inspection Goals and Site Assessment

Clearly define the objectives of the inspection, since they dictate the most suitable drone, sensors, and data collection techniques. A comprehensive site assessment is crucial to identify potential hazards, obstacles, and areas of interest, such as power lines, high winds, or urban canyons that can block GNSS signals.

Developing a Comprehensive Flight Plan

A well-structured flight plan ensures efficient coverage, maximizes battery life, and minimizes risks.

- Flight Path: Use pre-programmed waypoints and systematic flight patterns (e.g., grid, concentric circles, or contour-following) to ensure complete coverage and consistency.

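- Altitude: Optimal altitude is critical for image quality. For a 20-megapixel camera, flight heights of 165-195 feet (50-60 m) are recommended, and no higher than 230 feet (70 m). With a 40-megapixel camera, drones can fly at 328-394 feet (100-120 m), with a maximum of 460 feet (140 m), although anything above 400 feet generally requires special authorization. Flying lower generally improves mapping accuracy, because each pixel covers a smaller patch of ground, but increases flight time, because each image covers less area and more images and flight lines are needed.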

- Overlap: Overlap is crucial for accurate 3D models and image stitching; aim for at least 75-80% front overlap and 65-70% side overlap. Insufficient overlap can leave “NoData” holes in the output.

- Uniform Distance: Maintain a consistent distance between the drone and the ground or the inspected object throughout the flight, accounting for terrain variations. Terrain-following tools in flight planning applications can assist with this.
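
The altitude and overlap guidance above can be turned into numbers with the standard ground sample distance (GSD) relation: GSD = sensor width × altitude / (focal length × image width in pixels). The sketch below assumes camera parameters roughly typical of a 1-inch, 20 MP drone sensor (13.2 × 8.8 mm sensor, 8.8 mm focal length, 5472 px wide); these values and the `plan` helper are illustrative assumptions, so substitute your own camera's specifications.

```python
import math

# Assumed camera, roughly typical of a 1-inch, 20 MP drone sensor.
# These numbers are illustrative; use your own camera's specifications.
SENSOR_W_MM, SENSOR_H_MM = 13.2, 8.8
FOCAL_MM = 8.8
IMAGE_W_PX = 5472


def gsd_cm_per_px(altitude_m: float) -> float:
    """Ground sample distance: width of ground covered by one pixel, in cm."""
    return SENSOR_W_MM * altitude_m * 100.0 / (FOCAL_MM * IMAGE_W_PX)


def footprint_m(sensor_dim_mm: float, altitude_m: float) -> float:
    """Ground extent of one image side, from similar triangles
    (sensor size / focal length = ground size / altitude)."""
    return sensor_dim_mm * altitude_m / FOCAL_MM


def plan(altitude_m: float, front_overlap: float, side_overlap: float,
         area_width_m: float) -> dict:
    # Assumption: the long image side is oriented across the flight direction.
    across = footprint_m(SENSOR_W_MM, altitude_m)
    along = footprint_m(SENSOR_H_MM, altitude_m)
    line_spacing = across * (1.0 - side_overlap)  # gap between parallel flight lines
    shot_spacing = along * (1.0 - front_overlap)  # gap between photos on one line
    # Lines needed to span the area width, plus one line at the far edge
    flight_lines = math.ceil(area_width_m / line_spacing) + 1
    return {
        "gsd_cm": gsd_cm_per_px(altitude_m),
        "line_spacing_m": line_spacing,
        "shot_spacing_m": shot_spacing,
        "flight_lines": flight_lines,
    }


for altitude in (60, 40):
    print(altitude, plan(altitude, front_overlap=0.8, side_overlap=0.7, area_width_m=300))
```

With these assumptions, flying at 60 m with 80% front and 70% side overlap gives about 1.6 cm/px, flight lines roughly 27 m apart, and 13 lines across a 300 m-wide site. Dropping to 40 m sharpens the GSD to about 1.1 cm/px but needs 18 lines, which is where the extra flight time comes from.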

Equipment Readiness and Regulatory Compliance

Before each flight, conduct thorough pre-flight checks. Ensure all batteries are fully charged and spares are available, propellers are free from damage, and the drone firmware and control software are up to date. Obtain all necessary permits, licenses, and authorizations, including FAA approvals in the United States, property owner consent, and compliance with local regulations. Adhere to airspace rules, which generally mean staying below 400 feet above ground level and maintaining visual line of sight (VLOS) with the drone unless specifically authorized for Beyond Visual Line of Sight (BVLOS) operations.

Optimizing Flight Execution and Environmental Awareness

The actual flight execution requires precision and an understanding of environmental impacts on data quality.

Stable Flight and Camera Settings

Maintain steady flight paths and speeds to minimize motion blur and ensure consistent data. A 3-axis gimbal is essential to keep the camera stable and level, preventing shaky or tilted images. Proper camera settings are also vital:

- Exposure Settings: Adjust for optimal image brightness and clarity.

- White Balance: Select the correct setting for accurate color representation.

- Shutter Speed: Choose a shutter speed fast enough to freeze the drone's forward motion; the faster the drone flies, or the lower it flies, the faster the shutter must be.

- Focus Settings: Ensure sharp shots of the inspection target.
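
A simple way to check a shutter setting is to estimate forward motion blur: the distance flown during the exposure divided by the GSD gives the smear in pixels. The sketch below assumes a blur limit of about one pixel, a commonly used rule of thumb rather than a fixed standard, and uses an illustrative 8 m/s flight speed and GSD values; the function names are hypothetical. It models only forward motion and ignores vibration or gimbal movement.

```python
from typing import Optional

# Common shutter speeds, as denominators of 1/N second
STANDARD_SHUTTERS = [60, 125, 250, 500, 1000, 2000, 4000]


def motion_blur_px(speed_m_s: float, exposure_s: float, gsd_cm: float) -> float:
    """Forward smear in pixels: distance flown during the exposure / ground size of one pixel."""
    return speed_m_s * exposure_s / (gsd_cm / 100.0)


def slowest_shutter_s(speed_m_s: float, gsd_cm: float, max_blur_px: float = 1.0) -> float:
    """Longest exposure that keeps forward smear within max_blur_px."""
    return max_blur_px * (gsd_cm / 100.0) / speed_m_s


def pick_shutter(speed_m_s: float, gsd_cm: float, max_blur_px: float = 1.0) -> Optional[int]:
    """Slowest standard shutter (1/N s) that still meets the blur limit, or None."""
    limit_s = slowest_shutter_s(speed_m_s, gsd_cm, max_blur_px)
    for denom in STANDARD_SHUTTERS:
        if 1.0 / denom <= limit_s:
            return denom
    return None


print(motion_blur_px(8.0, 1 / 250, 1.64))  # about 1.95 px of smear at 1/250 s
print(pick_shutter(8.0, 1.64))            # prints 500, i.e. 1/500 s
print(pick_shutter(8.0, 1.10))            # prints 1000: smaller GSD needs a faster shutter
```

The last two calls show why flying lower demands a faster shutter: the same flight speed covers more pixels per unit time when each pixel represents less ground.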

Environmental Factors

Weather and environmental conditions significantly impact image quality and data accuracy.

- Weather Conditions: Avoid flying in strong winds (above about 7 meters per second, or roughly 15 mph), dense cloud cover, fog, haze, or rain, as these can reduce image quality and affect drone stability.

- Humidity and Dust: High humidity can affect sensor calibration, and airborne dust can scatter LiDAR laser pulses and add noise to the point cloud.

- Temperature: Extreme temperatures can affect drone operation and sensor accuracy.

- Obstacles: Be mindful of tall structures in urban areas (“urban canyons”) that can block or reflect GNSS signals and cause positioning inaccuracies.
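
The weather limits above can be folded into a simple go/no-go check before launch. This is a minimal sketch: the 7 m/s wind limit comes from the text, but the `Forecast` fields, the choice to judge wind by the stronger of sustained wind and gusts, and the default temperature range are assumptions for illustration. Replace the temperature range with the operating limits published for your own aircraft.

```python
from dataclasses import dataclass
from typing import List, Tuple

MAX_WIND_M_S = 7.0  # wind limit quoted in the text (roughly 15 mph)


@dataclass
class Forecast:
    wind_m_s: float  # sustained wind speed
    gust_m_s: float  # peak gust speed
    rain: bool
    fog_or_haze: bool
    dense_cloud: bool
    temp_c: float


def no_go_reasons(f: Forecast,
                  temp_range_c: Tuple[float, float] = (-10.0, 40.0)) -> List[str]:
    """Return reasons to postpone the flight; an empty list means no weather blocker.

    temp_range_c is a placeholder: replace it with the operating range
    published for your own aircraft.
    """
    reasons = []
    # Design choice: judge wind by the stronger of sustained wind and gusts
    if max(f.wind_m_s, f.gust_m_s) > MAX_WIND_M_S:
        reasons.append("wind above 7 m/s")
    if f.rain:
        reasons.append("rain")
    if f.fog_or_haze:
        reasons.append("fog or haze")
    if f.dense_cloud:
        reasons.append("dense cloud cover")
    low, high = temp_range_c
    if not low <= f.temp_c <= high:
        reasons.append("temperature outside operating range")
    return reasons


print(no_go_reasons(Forecast(wind_m_s=5.0, gust_m_s=9.0, rain=False,
                             fog_or_haze=False, dense_cloud=False,
                             temp_c=18.0)))  # ['wind above 7 m/s']
```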

Post-Flight Data Processing and Analysis

Once the imagery is captured, the next crucial step is processing and analyzing the data to extract actionable insights.

Processing Software and Accuracy

Specialized photogrammetry and mapping software suites are used to transform raw images into usable outputs such as high-definition orthophotos, Digital Surface Models (DSMs), Digital Terrain Models (DTMs), and 3D models/point clouds. Popular software options include Pix4D, DroneDeploy, Agisoft Metashape, and ArcGIS Pro.

To achieve the highest possible accuracy, especially for precise measurements and modeling, Real-Time Kinematic (RTK) or Post-Processed Kinematic (PPK) enabled drones are highly recommended. These systems provide centimeter-accurate positioning, reducing the need for numerous Ground Control Points (GCPs). For non-RTK drones, GCPs should be strategically placed every 490 feet (150 meters), while RTK-equipped drones may only need one every quarter mile (400 meters).
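
For a linear asset such as a pipeline or power line, the spacing guidance above translates directly into a GCP count. The sketch below places points evenly along a single line, with both ends included, so that no gap exceeds 150 m (non-RTK) or 400 m (RTK). Even spacing along one line is a simplification, since real layouts also depend on terrain and site access, and `corridor_gcps` is a hypothetical helper.

```python
import math
from typing import List

# Spacings quoted in the text
GCP_SPACING_M = {"non_rtk": 150.0, "rtk": 400.0}


def corridor_gcps(length_m: float, mode: str) -> List[float]:
    """Chainages (m) for evenly spaced GCPs along a linear asset, both ends
    included, so that no gap exceeds the recommended spacing for `mode`."""
    spacing = GCP_SPACING_M[mode]
    gaps = max(1, math.ceil(length_m / spacing))
    return [round(i * length_m / gaps, 1) for i in range(gaps + 1)]


print(len(corridor_gcps(1200, "non_rtk")))  # 9 points, 150 m apart
print(corridor_gcps(1200, "rtk"))           # [0.0, 400.0, 800.0, 1200.0]
```

On a 1.2 km corridor, switching to an RTK-equipped drone cuts the layout from 9 GCPs to 4, which is the practical saving the text describes.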

Leveraging AI and Machine Learning

The sheer volume of data collected by drones makes manual analysis challenging. Artificial intelligence (AI) and machine learning (ML) algorithms are increasingly used to process this data quickly and efficiently. AI can automatically identify patterns, detect anomalies, classify infrastructure defects, and extract valuable information from high-resolution imagery and thermal data. This streamlines the analysis process, and the models can become more accurate as they are retrained on newly labeled inspection data, reducing reliance on manual review and minimizing human error. The integration of AI with drone imagery transforms raw data into actionable insights for preventative maintenance and informed decision-making.
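
To make "detect anomalies" concrete, the sketch below flags hot spots in a thermal frame using a plain statistical z-score threshold. This is deliberately not machine learning: it is a simple baseline standing in for the trained models described above, and it assumes the thermal image has already been converted to a 2D grid of temperatures in °C. The function name and the 3-sigma threshold are illustrative assumptions.

```python
import statistics
from typing import List, Tuple


def thermal_hotspots(temps_c: List[List[float]],
                     z_thresh: float = 3.0) -> List[Tuple[int, int, float]]:
    """Flag pixels whose temperature is more than z_thresh standard deviations
    above the image mean. Returns (row, col, temperature) for each flagged pixel."""
    values = [t for row in temps_c for t in row]
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return []  # perfectly uniform image: nothing stands out
    return [
        (r, c, t)
        for r, row in enumerate(temps_c)
        for c, t in enumerate(row)
        if (t - mean) / sd > z_thresh
    ]


# Synthetic 10 x 10 frame: uniform 25 °C panel with one hot connector
frame = [[25.0] * 10 for _ in range(10)]
frame[4][7] = 60.0
print(thermal_hotspots(frame))  # [(4, 7, 60.0)]
```

A global threshold like this struggles with uneven backgrounds such as sunlit versus shaded surfaces, which is one reason production systems rely on models trained on labeled defect imagery instead.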